AI, Privacy & Public Security

How AI assistants are erasing our individual identity, and what we can do about it.

Since the dawn of civilization, the enterprising individual soul has driven human advancement, from the first spark of fire to the complex socioeconomic architectures of our day. Yet the antithesis of that progress has always lived inside the collective. As Freud observed, group psychology forms when individuals project their ego ideal onto a leader, and in doing so, trade their independent judgment for something easier: conformity.

If the collective is the gravitational pull, what keeps the individual from collapsing into it?

Privacy. Private space is where identity breathes. It is where we are not performing, where we do not edit ourselves for approval, where half-formed ideas can exist before they are socially safe. In private, we can be wrong, strange, honest, and still intact. That interior freedom is not a luxury. It is the root system of creativity, moral agency, and intellectual courage. And today, it faces a threat that looks like convenience.

The new confessional

Using AI as a therapist, friend, mentor, or thinking partner feels revolutionary until you notice the price tag. AI chatbots are becoming an inseparable extension of our thinking. We are moving our most human material (our doubts, impulses, private griefs, eccentric beliefs, and forbidden questions) into centralized systems owned by corporations whose incentives are not aligned with protecting the sanctity of our inner lives.

The core problem is not that “AI is evil.” The problem is that the modern frontier chatbot is not a private room. It is a managed platform. Your words pass through systems designed for safety monitoring, abuse detection, and policy enforcement. Sometimes that monitoring stays automated. Sometimes it does not.

OpenAI explicitly states that some conversations can be reviewed to monitor for abuse, and that Temporary Chats are deleted after 30 days but may still be reviewed for abuse monitoring.

Google explicitly states that even with Gemini Apps Activity off, future chats may still be saved for up to 72 hours to provide and protect the service, and that conversations reviewed by human reviewers are retained separately and for longer.

Anthropic states that if a chat is flagged by its trust and safety classifiers, it can retain the inputs and outputs for up to two years and the classifier scores for up to seven years. It has also announced longer retention (up to five years) when users allow their data to be used for training.

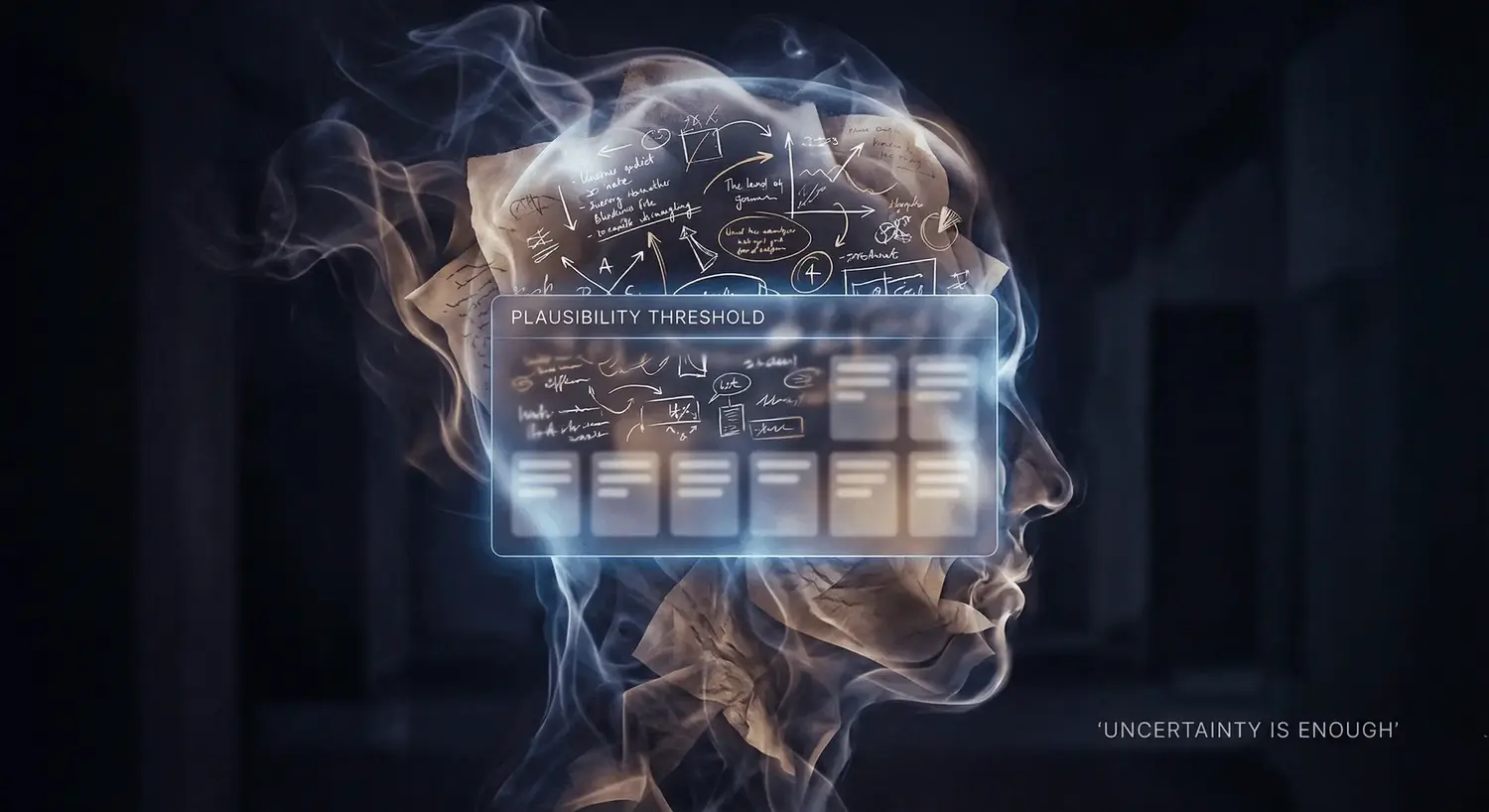

This matters because it changes the psychological texture of thought. Even if nobody is reading everything, the user cannot know when a conversation becomes legible to a human institution. Uncertainty is enough. It creates a quiet, invisible supervisor in the mind. That supervisor does not need to be real-time. It only needs to be plausible.

The spiral: from monitoring to self-censorship

Here is the dynamic most people miss: At first, you only avoid obviously risky topics. Then you start avoiding anything that might be misread. Then you start compressing your personality into what you believe the system is “comfortable” with. You keep the safe questions. You delete the sharp edges. You stop exploring the strange paths that create original work.

Over time, self-censorship stops feeling like censorship. It starts feeling like maturity. That is the victory condition of surveillance. Not punishment, but internalization. And this is where Freud returns with teeth. The “leader” does not need to be a charismatic dictator. A system can become the leader. A platform can become the authority. When people project their trust and their ego ideal onto a centralized intelligence, they regress into dependency and compliance. The collective becomes not a chanting crowd, but a moderation layer, a set of opaque triggers, a policy envelope you cannot inspect, and therefore cannot truly consent to.

“This is for safety.” Yes. That is the trap.

The strongest counterargument is also the most credible: frontier AI is powerful, and power invites misuse. Regulators, developers, and the public are not irrational for wanting guardrails.

But here is what must be said plainly: perfect security is impossible. Even if you compromise privacy, you do not achieve perfect safety. You only create a new capability: the ability to monitor, classify, and potentially punish thought at scale. And that capability is itself a weapon. It is the kind of mechanism history has never failed to repurpose.

OpenAI has explicitly written that if human reviewers determine a case involves an imminent threat of serious physical harm to others, it may refer it to law enforcement. You might agree with that in extreme cases today. Many people do. But the deeper issue is the precedent: private corporate policy becomes a pipeline from inner speech to institutional action. What happens when the definition of “threat” expands? What happens when laws change? What happens when political pressure rises, when fear becomes profitable, when the public is primed to trade liberty for the promise of control?

The path to a totalitarian world is not always marching boots. Sometimes it is safety, convenience, and a terms-of-service update.

“Just use local models.” Reality check.

The most secure method is always local: keep your data on your own hardware and never share it with the cloud. In principle, that is the clean answer.

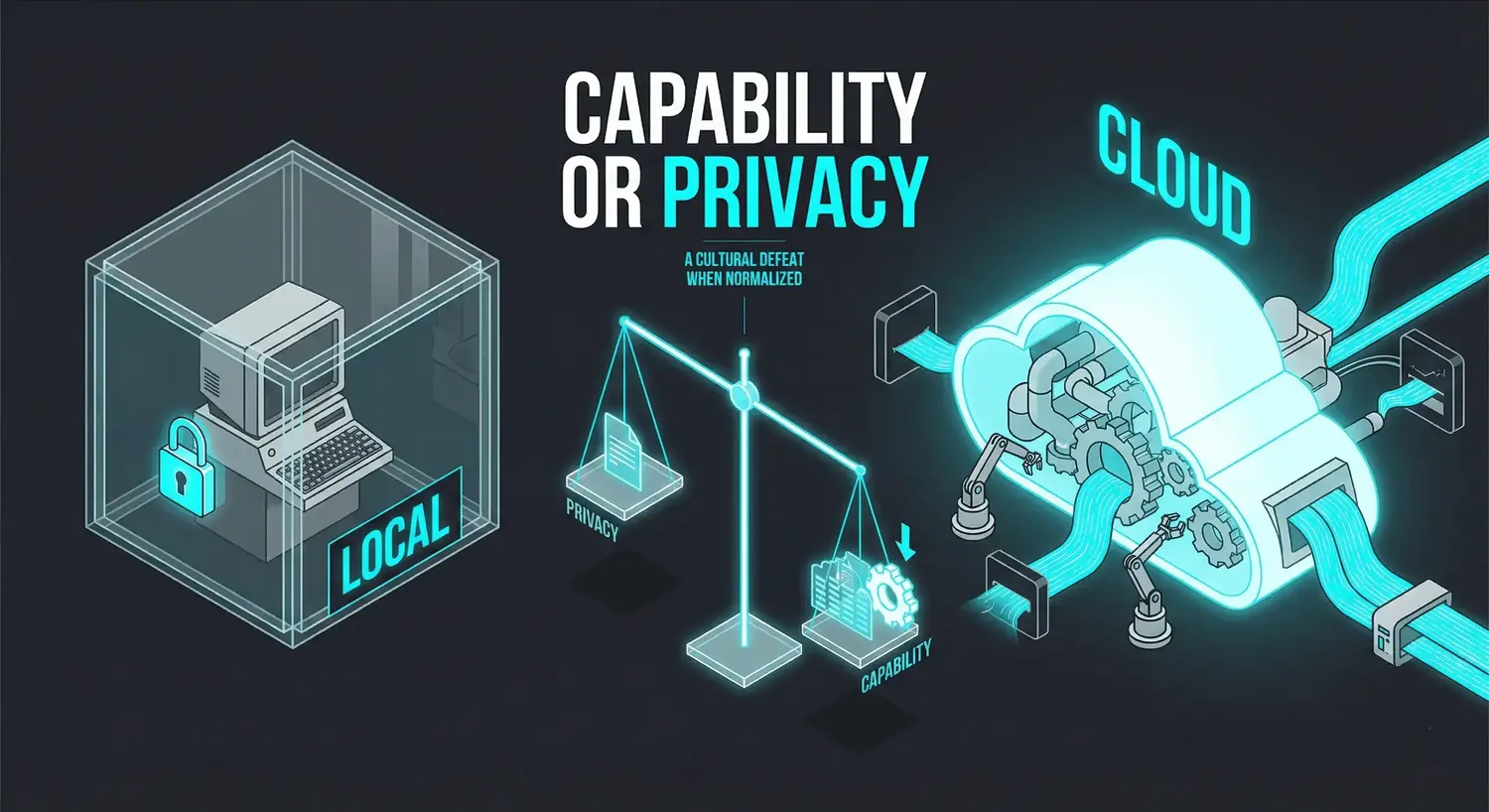

In practice, local LLMs are real, and they are improving fast, but most consumers do not have the hardware, the patience, or the workflow discipline to run strong models locally for everything. The people who can are a minority. The rest will default to the cloud, because the cloud is where the best reasoning, integrations, and reliability live.

Yes, you can run impressive models on consumer machines. But the capability gap still matters for most people’s daily usage, especially when you want tool use, long context, integration with business workflows, or simply the best frontier-level performance. So “go local” is not a solution at a civilizational scale. It is a niche survival tactic for the technically committed.

Privacy-focused alternatives exist, but they force a trade

There are privacy-focused products that aim to guarantee privacy through design, not promises (for example: Thaura AI, Brave AI, Venice AI). Some providers position themselves explicitly around minimal retention, reduced logging, and user control.

But the ecosystem reality remains. Most of these rely on open-weight models or constrained infrastructure, which often struggle to match frontier systems on deep reasoning, multimodal breadth, and integration. Giants have distribution, tooling, data flywheels, and the compute advantage. This means the average user faces a forced bargain: capability or privacy.

And when society normalizes that bargain, society normalizes the idea that privacy is optional, and only for those willing to accept weaker tools. That is a cultural defeat.

My position: do not trade privacy for hypothetical control

I think we need to proceed with full user privacy.

Obsessing over security to the point of enabling mass monitoring for dangers that may never occur is illogical. There will always be casualties and losses no matter what we do. We cannot control everything. We cannot act as gods. The best we can do is act within human morality, and morality begins with respecting the boundary between the individual soul and the institution.

Even if perfect security were achievable, the mechanism that achieves it becomes its own danger. Surveillance at scale is not neutral. It is an invitation.

Again and again in history, when we have tried to end a conflict before it begins, we have compromised something far more valuable, often for nothing. We should not allow that compromise to become global, ambient, and default. If we were truly so concerned with the misuse of advanced technology, we would never have advanced it at all.

What a sane future would look like

The only durable end to this conflict is structural.

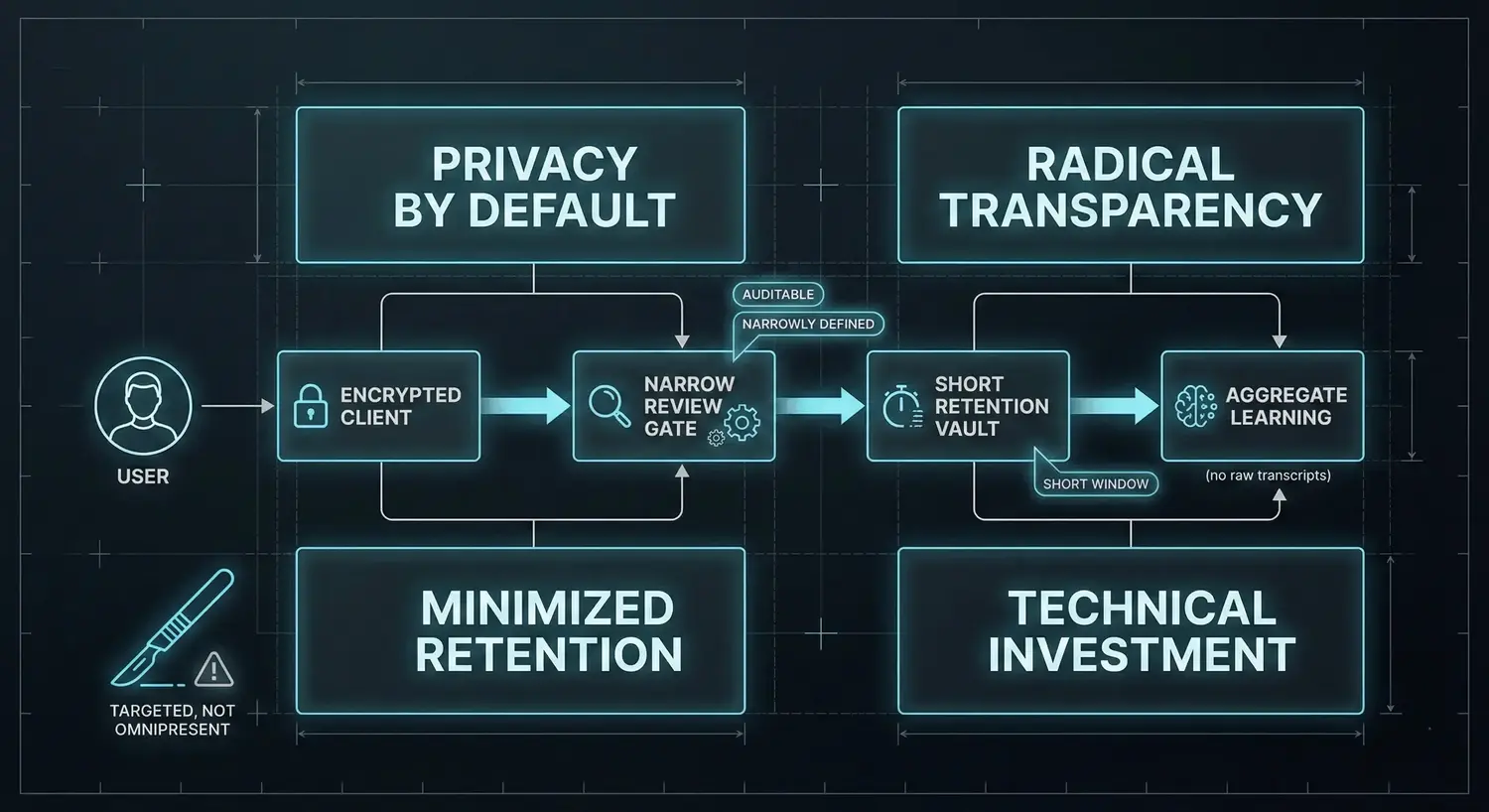

- Privacy-by-default for consumer AI, not as a hidden toggle, not as an upsell, not as a temporary mode.

- Radical transparency about what triggers safety systems and human review, at least at the level of categories and thresholds, even if full adversarial details stay private.

- Minimized retention as a principle, not a marketing line. If a chat must be retained, the justification and window should be explicit.

- Technical investment in architectures that preserve capability without absorbing private lives: encrypted storage, local-first computation where possible, client-side encryption, end-to-end encrypted chat history, and privacy-preserving learning in which model improvement does not require raw transcripts. Federated learning and differential privacy are not magic, but they show what mature engineering looks like: progress without extraction.
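To make "progress without extraction" concrete, here is a minimal sketch of the textbook Laplace mechanism from differential privacy. It releases a noisy count, for example how many users' chats touched some feature, so that adding or removing any one person barely changes the published number. The function names, the `flags` input, and the ε values are illustrative assumptions. This is not any provider's actual pipeline.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    # If X, Y ~ Exponential(rate = 1/scale) independently, then X - Y ~ Laplace(0, scale).
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(flags: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release the number of True flags under epsilon-differential privacy.

    Assumes each user contributes at most one flag, so adding or removing
    one user changes the true count by at most 1 (sensitivity = 1).
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    true_count = sum(flags)
    # Noise scale grows as epsilon shrinks: stronger privacy, noisier answer.
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)  # seeded only so the demo is repeatable
    # Hypothetical aggregate: did each user's chats touch feature X?
    # Only the noisy total is published, never the transcripts.
    flags = [True] * 40 + [False] * 60
    print(f"noisy count (epsilon=1.0): {private_count(flags, 1.0, rng):.1f}")
```

A smaller ε adds more noise and gives stronger privacy. The sensitivity of 1 holds only under the stated assumption that each user contributes at most one flag.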

And yes, safety matters. But the mature response is not omnipresent inspection. It is a set of narrower mechanisms that do not treat every user as a suspect by default.

- If a system needs guardrails, design them to be minimally invasive.

- If escalation to humans is necessary, define it narrowly, audit it, and make it legible.

- If retention is required, make the window explicit and short, and separate operational retention from “because we can.”

In other words, safety should be engineered like medicine, not like a surveillance state: targeted, accountable, and reluctant.

Because once you build a society around “we must monitor thought to prevent harm,” you have already lost the moral argument. You have replaced human dignity with administrative convenience.

What you can do now, without pretending you can opt out of history

Structural change takes time. Until then, personal discipline is the difference between using these tools and being used by them.

1) Treat frontier chat as powerful, not private.

Do not pour your full interior life into systems you do not control. Not because you are guilty, but because you are human. Spread your use across several services and accounts. Keep track of what you share, and do not give any single system a complete picture of your life.

2) Turn off optional model training.

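Most frontier AI services let you opt out of model training. This does not guarantee your privacy, but it can narrow how your chats are used and how long they are kept. Anthropic, for instance, applies its longer five-year retention only when users allow their data to be used for training.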

3) Use privacy-first alternatives.

Try privacy-focused AI services like Lumo from Proton. These services rely on encryption and minimal retention rather than on policy promises alone, though they come with some functional drawbacks. For many everyday tasks, that trade-off is worth it.

4) Demand transparency.

- What triggers automated flags?

- What triggers human review?

- What triggers longer retention?

- What triggers escalation beyond the platform?

5) Support policy that treats AI chats as sensitive by default.

Retention limits, secondary-use limits, independent audits, meaningful consent, and enforceable rights. Not “best practices,” not ethics theater, law.

6) Work on the technical path that refuses the bargain.

If you build, invest in architectures that preserve capability without absorbing private lives. Local-first where possible. Encrypted storage by default. Client-side keys. Privacy-preserving analytics. Transparent safety envelopes. Systems that can improve without turning the user into a database instance.

The line we must hold

The future is not decided by a single company’s privacy policy. It is decided by the norm we accept. If society accepts that the price of intelligence is monitored inner speech, then that is what intelligence will become: a tool that upgrades performance while quietly training compliance. The most dangerous part is not the logging, not the retention windows, not even the occasional human review.

The most dangerous part is what it teaches people to do to themselves. Freud warned that the individual dissolves when the ego ideal is projected onto a leader. The modern leader does not wear a uniform. It wears a friendly interface. It speaks in helpful tones. It is always available. It is always listening, at least a little. And because it is so useful, we normalize it. We build workflows around it. We teach children to use it. We make it the default companion to thought. Then one day we look up and realize the private room is gone, not because it was stolen, but because we traded it for convenience, one prompt at a time.

We are not merely talking about the privacy of chat logs. We are talking about whether human consciousness remains wild, honest, and sovereign, or whether it becomes something supervised, optimized, and domesticated.

And that deserves our attention.

References

- OpenAI Data Controls FAQ (Temporary Chats, abuse monitoring)

- OpenAI Chat and File Retention Policies in ChatGPT

- OpenAI “Helping people when they need it most” (imminent threat, potential law enforcement referral)

- Google Gemini Apps Privacy Hub (72-hour retention with Activity off; reviewed chats retention)

- Gemini Chats Human Review

- Anthropic Privacy Center (retention if flagged; classifier scores retention)

- Anthropic consumer terms update (five-year retention when users allow training)